Demand for fast, efficient artificial intelligence keeps growing, and much of that demand falls on inference: running trained models on new data in production. Edge AI has emerged as a strong answer to inference workload challenges. By running models on or near the devices that generate the data, it addresses five challenges that have long hindered the deployment of AI models in real-world scenarios: latency, bandwidth, privacy, reliability, and scalability. Let’s dive into how Edge AI is changing the field and paving the way for smart devices that can make decisions on their own.

Table of Contents

- Overview of AI Inference Workload Challenges

- Benefits of Implementing Edge AI for AI Inference

- Optimizing Hardware and Software for Efficient AI Inference

- Real-world Applications and Case Studies of Edge AI Success Stories

- Q&A

- Key Takeaways

Overview of AI Inference Workload Challenges

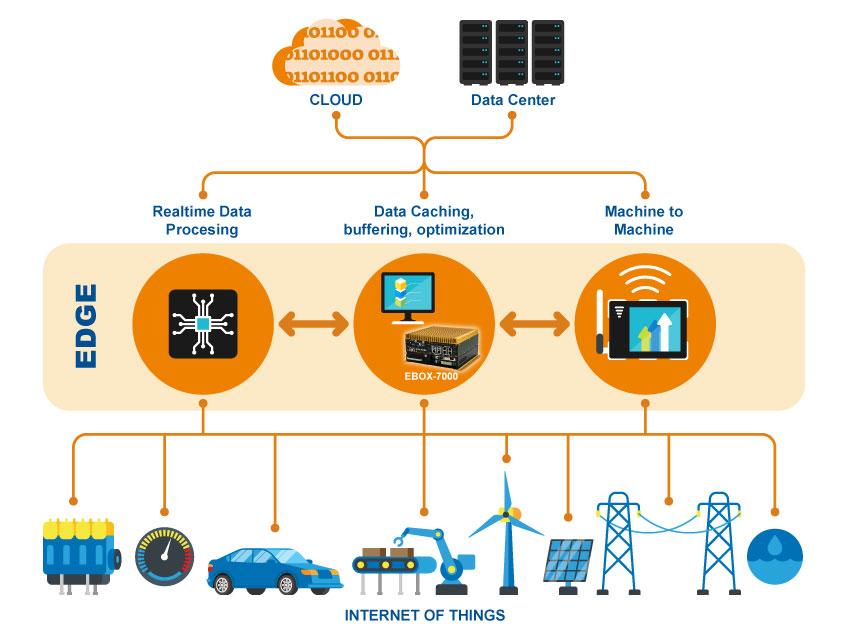

One key challenge in AI inference workloads is their high computational demand, which can slow processing and drive up power consumption. This is especially problematic at the edge, where compute, memory, and power are limited. Another challenge is real-time processing: many applications need results within strict time limits, and delays reduce the usefulness of a model's output. Finally, the diversity of AI models and algorithms complicates inference workloads further, calling for flexible solutions.

Edge AI addresses these challenges by shifting some of the computation from the cloud to edge devices. This reduces latency and bandwidth requirements, because data no longer has to travel to a remote server for every prediction. Edge AI also enables local decision-making, which improves privacy and security by keeping sensitive data on the device. Because edge hardware is itself resource-constrained, these gains depend on pairing models with hardware and software optimized for the device, as discussed below. With that in place, organizations can meet real-time requirements and support a range of AI models, making Edge AI a valuable tool for optimizing inference workloads.

Benefits of Implementing Edge AI for AI Inference

Implementing Edge AI for inference offers several benefits. The most important is local data processing, which reduces latency and enables real-time decision-making. Because data does not have to make a round trip to a remote server, results arrive sooner, making Edge AI well suited to applications that need near-instantaneous responses.
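To see why local processing shortens response times, it helps to add up where the time goes. The sketch below is a simplified latency budget; the function names, the 50 ms deadline, and every timing in it are hypothetical assumptions chosen for illustration, not measurements. It shows how a slower on-device model can still respond sooner than a faster cloud model once the network round trip is counted.

```python
def cloud_latency_ms(uplink_ms: float, server_infer_ms: float, downlink_ms: float) -> float:
    """End-to-end latency when raw data is sent to a remote server for inference."""
    return uplink_ms + server_infer_ms + downlink_ms


def edge_latency_ms(device_infer_ms: float) -> float:
    """End-to-end latency when inference runs on the device (no network hop)."""
    return device_infer_ms


# Hypothetical figures for illustration only: 40 ms each way over the network,
# 10 ms on a fast cloud server, 25 ms on a slower edge accelerator.
cloud = cloud_latency_ms(uplink_ms=40, server_infer_ms=10, downlink_ms=40)
edge = edge_latency_ms(device_infer_ms=25)

BUDGET_MS = 50  # assumed response deadline, e.g. for a control loop
for name, latency in (("cloud", cloud), ("edge", edge)):
    print(f"{name}: {latency} ms, meets {BUDGET_MS} ms budget: {latency <= BUDGET_MS}")
```

Real deployments add queuing, serialization, and preprocessing time on both paths, so measured values should replace these placeholders before drawing conclusions.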

Furthermore, Edge AI can significantly reduce bandwidth usage by processing and analyzing data on-device, minimizing the need to transmit large amounts of information to the cloud. This not only cuts down on data transfer costs but also improves overall system efficiency. Additionally, Edge AI enhances data privacy and security by keeping sensitive information localized and reducing the risk of data breaches during transmission.
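A back-of-the-envelope calculation shows the scale of the potential bandwidth savings. The sketch assumes a camera that would otherwise stream 4 Mbit/s of encoded video to the cloud, compared with a device that runs detection locally and uploads only compact results (an assumed 5 detections of 20 bytes each per frame at 30 frames per second). All figures and function names are illustrative assumptions.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def daily_bytes_streaming(bitrate_bps: float) -> float:
    """Bytes per day if the device uploads its encoded video stream continuously."""
    return bitrate_bps / 8 * SECONDS_PER_DAY


def daily_bytes_results(fps: float, detections_per_frame: int, bytes_per_detection: int) -> float:
    """Bytes per day if the device runs inference locally and uploads only detections."""
    return fps * detections_per_frame * bytes_per_detection * SECONDS_PER_DAY


# Assumed workload: 4 Mbit/s video vs. 5 detections x 20 bytes per frame at 30 fps.
streamed = daily_bytes_streaming(4_000_000)
results = daily_bytes_results(fps=30, detections_per_frame=5, bytes_per_detection=20)
print(f"stream to cloud:   {streamed / 1e9:.1f} GB/day")
print(f"send results only: {results / 1e6:.1f} MB/day")
print(f"reduction:         {streamed / results:.0f}x")
```

Under these assumptions, streaming costs about 43.2 GB per day while sending results costs about 259.2 MB, roughly 167 times less. Devices that upload only when an event occurs would send even less.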

Optimizing Hardware and Software for Efficient AI Inference

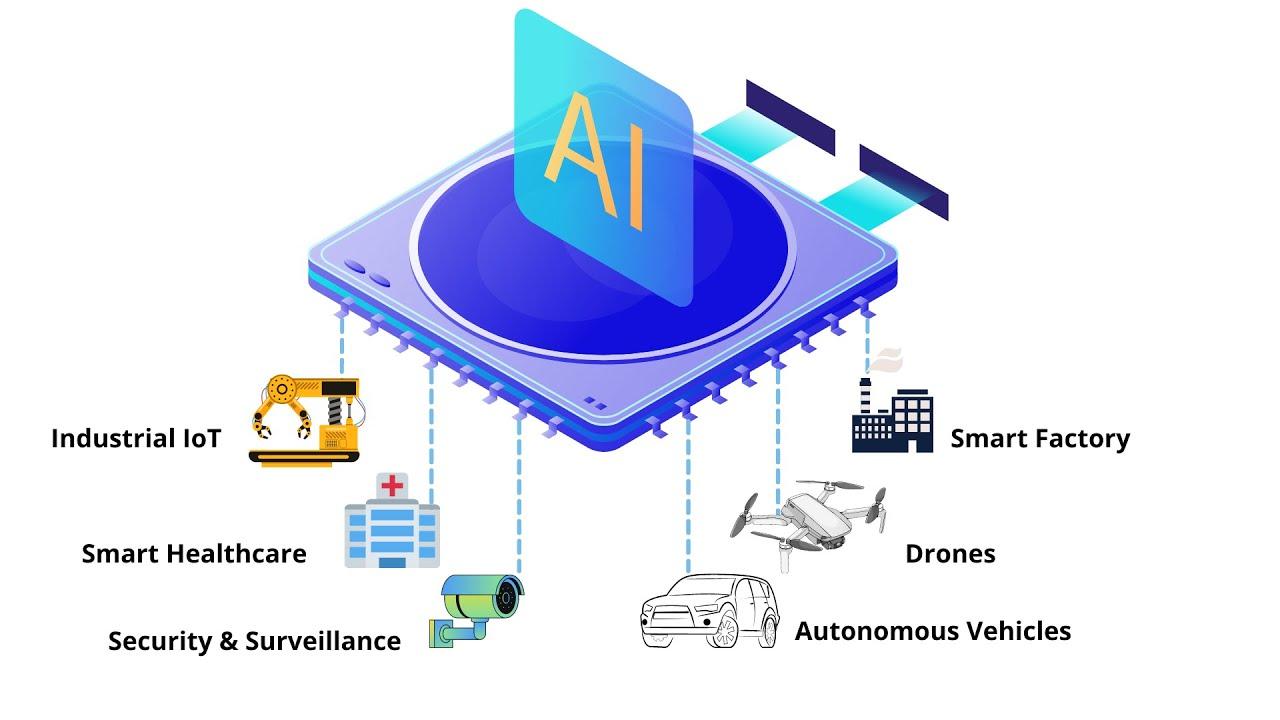

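Efficient inference at the edge depends on optimizing both hardware and software for constrained devices. On the hardware side, this typically means using accelerators such as GPUs, NPUs, or other dedicated AI chips; on the software side, it means techniques such as model quantization and pruning, along with inference runtimes tuned for the target device. Together, these optimizations let edge devices run models efficiently despite limited compute, memory, and power.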

The payoff is lower latency: decisions can be made in real time without relying on cloud computing. This matters most for applications such as autonomous vehicles, security systems, and industrial automation, where decisions must be made in a split second. Processing data locally also minimizes bandwidth usage and improves data privacy. As hardware and software optimization advance, Edge AI is paving the way for intelligent devices that can perform complex AI tasks quickly and accurately.
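Quantization is one widely used software optimization for edge inference: model weights are stored as 8-bit integers instead of 32-bit floats, which cuts weight storage roughly fourfold and suits integer-friendly accelerators. The article does not prescribe a specific technique; the pure-Python sketch below shows symmetric, per-tensor int8 quantization as one example. The function names are hypothetical, and production toolchains typically use more elaborate schemes (per-channel scales, calibration data, quantization-aware training).

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization.

    Returns integer codes in [-127, 127] and the scale needed to recover
    approximate float values (w is approximately q * scale).
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid division by zero for all-zero tensors
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_int8(codes: list[int], scale: float) -> list[float]:
    """Map integer codes back to approximate float weights."""
    return [q * scale for q in codes]


weights = [0.5, -1.27, 0.013, 1.0, -0.004]
codes, scale = quantize_int8(weights)
restored = dequantize_int8(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(f"codes={codes}, scale={scale:.5f}, max error={max_error:.5f}")
# Each value now needs 1 byte instead of 4 (float32), plus one shared scale.
```

Rounding to the nearest code bounds the per-weight error at half the scale, which is why accuracy usually degrades only slightly for well-behaved weight distributions.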

Real-world Applications and Case Studies of Edge AI Success Stories

Edge AI is being adopted across industries as a way to address common challenges organizations face when deploying AI models. In real-world applications, it tackles five AI inference workload challenges:

- Latency: By processing data on the edge devices rather than sending it to a centralized cloud server, Edge AI significantly reduces the latency in AI inference tasks.

- Bandwidth: Edge AI minimizes the need for high bandwidth by processing data locally, making it ideal for applications in remote areas or locations with limited internet connectivity.

- Privacy: With Edge AI, sensitive data can be processed on the device itself, improving user privacy and data security because raw data need not leave the device.

- Reliability: Edge AI systems can keep operating in offline environments, making them a reliable choice for critical applications that cannot afford downtime.

- Scalability: Edge AI can scale to meet growing workload demands by distributing processing across multiple edge devices, making efficient use of available resources.
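The reliability point can be made concrete with a store-and-forward pattern: the device always runs inference locally, queues results while the network is down, and uploads the backlog once connectivity returns. The sketch below is a hypothetical illustration, not any product's API; the `EdgeNode` class, the `online` flag, the toy model, and the list that stands in for an upload call are all assumptions. Only model outputs are queued, never the raw input, which also reflects the privacy point in the list.

```python
from collections import deque
from typing import Callable


class EdgeNode:
    """Hypothetical edge node: always infers locally, uploads results when online."""

    def __init__(self, model: Callable[[bytes], str], max_pending: int = 1000) -> None:
        self.model = model
        # Bounded queue: if the device stays offline too long, the oldest results are dropped.
        self.pending: deque[str] = deque(maxlen=max_pending)
        self.uploaded: list[str] = []  # stand-in for a real upload endpoint

    def handle(self, sample: bytes, online: bool) -> str:
        result = self.model(sample)  # inference never waits on the network
        self.pending.append(result)  # only the result is kept, not the raw sample
        if online:
            self.flush()
        return result

    def flush(self) -> None:
        # Upload queued results in the order they were produced.
        while self.pending:
            self.uploaded.append(self.pending.popleft())


node = EdgeNode(model=lambda sample: f"bytes={len(sample)}")  # toy "model" for illustration
node.handle(b"frame-1", online=False)   # network down: result queued
node.handle(b"frame-22", online=False)
node.handle(b"frame-333", online=True)  # network back: backlog uploaded in order
print(node.uploaded)
```

The bounded queue is a deliberate trade-off: it caps memory use on a small device at the cost of losing the oldest results during a long outage.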

Q&A

Q: What are some of the challenges faced in AI inference workloads?

A: AI inference workloads often struggle with processing power limitations, latency issues, bandwidth constraints, data privacy concerns, and energy efficiency challenges.

Q: How does Edge AI help to solve these challenges?

A: Edge AI brings AI processing closer to the source of data, reducing latency and bandwidth constraints. It also allows for more efficient use of processing power and energy, while improving data privacy by keeping sensitive information on-device.

Q: How does Edge AI improve processing power efficiency?

A: By running inference on-device, Edge AI puts local compute to work instead of constantly transferring data to and from the cloud, which improves processing efficiency and overall performance.

Q: How does Edge AI address latency issues?

A: Edge AI processes data locally, reducing the time it takes for data to travel back and forth between devices and the cloud, thus minimizing latency and improving response times.

Q: Can Edge AI help with energy efficiency concerns?

A: Yes, Edge AI can help conserve energy by reducing the amount of data that needs to be transmitted over the network, and by optimizing processing power usage through on-device computations.
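Whether on-device processing saves energy depends on how much radio time it avoids compared with how much compute it adds. The sketch below compares the two using E = P × t; the function names, the device figures, and the simplifications listed in the docstring are all assumptions for illustration, not measurements of real hardware.

```python
def energy_uj(power_mw: float, duration_ms: float) -> float:
    """E = P * t. Milliwatts times milliseconds gives microjoules."""
    return power_mw * duration_ms


def local_inference_saves_energy(
    radio_mw: float,
    raw_upload_ms: float,
    compute_mw: float,
    infer_ms: float,
    result_upload_ms: float,
) -> bool:
    """Compare offloading raw data vs. inferring locally and sending only the result.

    Simplified model: ignores idle power, receiving the cloud's reply,
    and radio wake-up costs.
    """
    offload = energy_uj(radio_mw, raw_upload_ms)
    local = energy_uj(compute_mw, infer_ms) + energy_uj(radio_mw, result_upload_ms)
    return local < offload


# Hypothetical device: 800 mW radio, 500 mW accelerator, 20 ms inference, 2 ms to send a result.
print(local_inference_saves_energy(800, 50, 500, 20, 2))  # large input: 50 ms to upload
print(local_inference_saves_energy(800, 5, 500, 20, 2))   # small input: 5 ms to upload
```

With these assumed numbers, local inference wins when the raw upload takes 50 ms but loses when it takes only 5 ms, so the energy benefit is largest for data-heavy inputs such as images and video.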

Key Takeaways

The rise of Edge AI presents a promising solution to the challenges facing AI inference workloads. By bringing AI closer to where data is generated, Edge AI offers lower latency, reduced bandwidth use, improved privacy, stronger security, and greater efficiency. As the technology matures, Edge AI is likely to play a vital role in shaping the future of artificial intelligence. Embracing this approach could change the way we interact with AI systems and open new possibilities across industries. So, let’s harness the power of Edge AI and unlock the full potential of artificial intelligence.