AI infrastructure has changed substantially in recent years. Workloads that once ran on traditional on-site hardware are increasingly moving to the cloud and to edge devices, and that move changes how AI systems are built and operated. This article looks at what is driving the transition, the challenges it raises, and the opportunities it opens for AI development.

Table of Contents

- The Evolution of AI Infrastructure: A Shift towards Cloud Computing

- Maximizing Efficiency with Edge Computing in AI Development

- Navigating the Challenges: Strategies for a Seamless Transition to Cloud and Edge AI Infrastructure

- Key Considerations for Optimizing AI Infrastructure in the Cloud and Edge Environment

- Q&A

- To Wrap It Up

The Evolution of AI Infrastructure: A Shift towards Cloud Computing

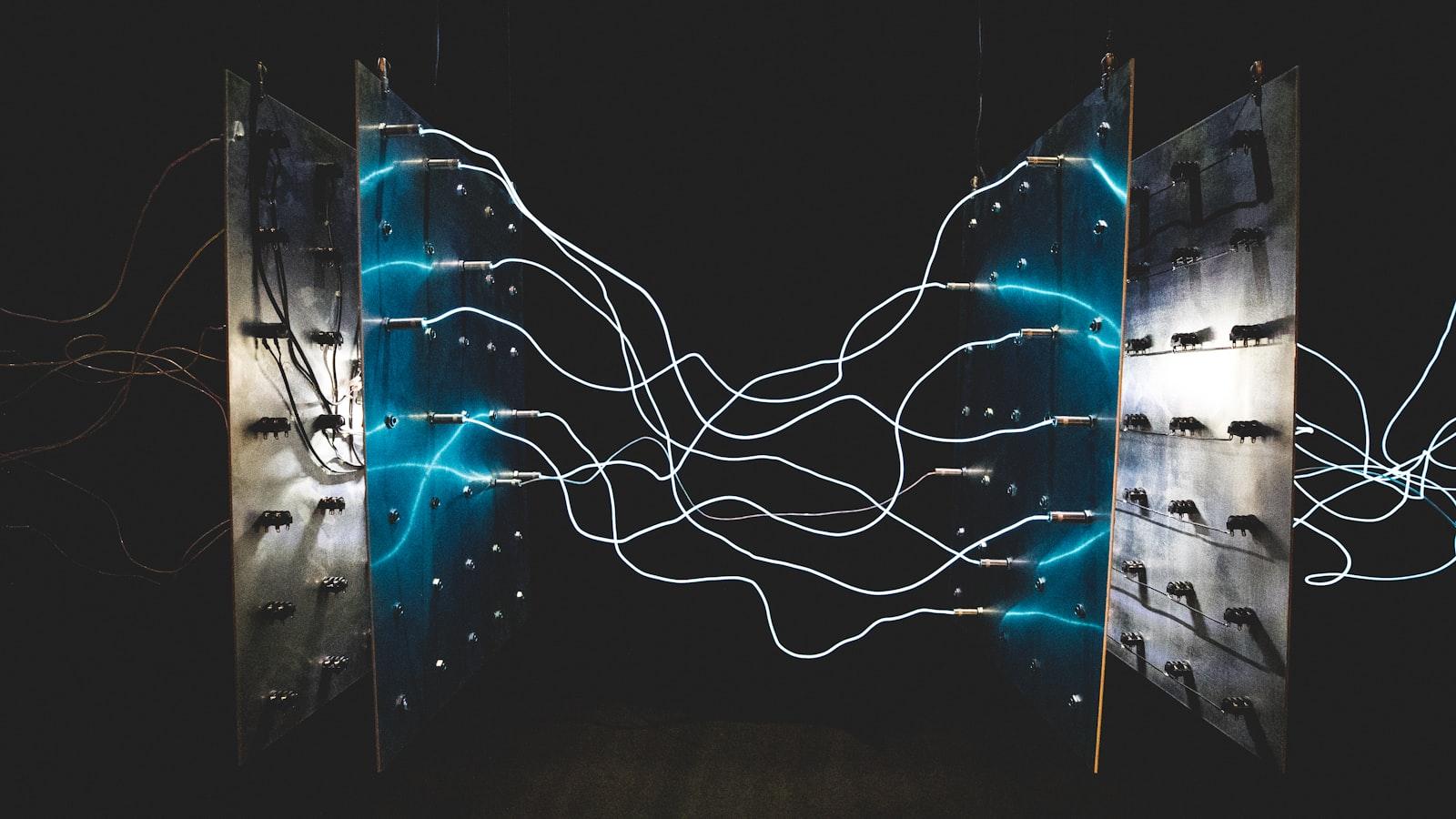

In recent years, AI infrastructure has moved away from traditional on-site setups towards cloud and edge computing. The shift is driven by the need for more scalable, efficient, and cost-effective ways to support the growing demands of AI applications. AI systems consume and generate large volumes of data, and cloud computing offers the flexibility and scalability to handle the resulting computation and storage requirements.

The adoption of cloud computing for AI has been accompanied by advances in edge computing, where processing is decentralized to the edge of the network, close to where data is produced. This allows quicker data processing and lower latency, which makes it well suited to real-time applications such as autonomous vehicles and IoT devices. As AI technologies continue to evolve, the combination of cloud and edge computing will play an important role in shaping AI infrastructure.

Maximizing Efficiency with Edge Computing in AI Development

Moving AI development from traditional on-site setups to cloud and edge infrastructure can improve both efficiency and performance. With distributed computing, developers can process and analyze data closer to its source, which reduces latency and makes better use of available resources.

With edge computing, real-time data processing and decision-making happen closer to end users. This improves response times and enables more intelligent and adaptive applications. Developers can use these capabilities to build solutions that are efficient, scalable, and responsive enough for latency-sensitive workloads.
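The latency trade-off between edge and cloud can be made concrete with a small placement rule. The following is a minimal sketch, not a production scheduler: the function name `choose_target` and all timing figures are hypothetical, and it assumes the only costs that matter are inference time and the network round trip to the cloud.

```python
def choose_target(edge_infer_ms: float,
                  cloud_infer_ms: float,
                  network_rtt_ms: float) -> str:
    """Pick the placement with the lower estimated end-to-end latency.

    Edge latency is the on-device inference time alone.
    Cloud latency is the (usually faster) inference time plus the
    network round trip needed to send the input and receive the result.
    Ties go to the edge, since it avoids the network entirely.
    """
    edge_total = edge_infer_ms
    cloud_total = cloud_infer_ms + network_rtt_ms
    return "edge" if edge_total <= cloud_total else "cloud"


# Hypothetical figures: a small on-device model vs. a faster cloud GPU.
print(choose_target(edge_infer_ms=40, cloud_infer_ms=10, network_rtt_ms=80))
print(choose_target(edge_infer_ms=200, cloud_infer_ms=10, network_rtt_ms=30))
```

In this sketch the first call favors the edge because the round trip dominates, while the second favors the cloud because the on-device model is slow. A real decision would usually also weigh bandwidth, cost, and privacy constraints.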

Navigating the Challenges: Strategies for a Seamless Transition to Cloud and Edge AI Infrastructure

AI infrastructure is crucial for businesses that want to stay competitive. As companies move from on-site data centers to cloud and edge computing environments, they face a range of challenges. One key strategy is to plan and implement a comprehensive roadmap that addresses the specific requirements of cloud and edge AI infrastructure.

Another important aspect is security: protecting sensitive data and complying with regulatory requirements. Measures such as encryption, multi-factor authentication, and network segmentation help mitigate the risks associated with cloud and edge computing. In addition, automated monitoring and response systems help organizations detect and respond to potential security threats quickly, protecting the integrity of their AI infrastructure.
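As one illustration of automated monitoring, the sketch below flags a source that produces too many failed logins within a sliding time window. The class name `FailedLoginMonitor`, the threshold, and the window length are all assumptions made for the example. A real deployment would typically rely on a dedicated monitoring or SIEM product rather than hand-written code.

```python
from collections import defaultdict, deque


class FailedLoginMonitor:
    """Flag sources with too many failed logins in a sliding window."""

    def __init__(self, threshold: int = 5, window_s: float = 60.0) -> None:
        self.threshold = threshold   # failures needed to raise a flag
        self.window_s = window_s     # window length in seconds
        self._events: dict[str, deque[float]] = defaultdict(deque)

    def record_failure(self, source: str, timestamp: float) -> bool:
        """Record one failed login and return True if `source` is now flagged.

        Timestamps are assumed to arrive in non-decreasing order per source.
        """
        events = self._events[source]
        events.append(timestamp)
        # Drop failures that have fallen out of the window.
        while events and timestamp - events[0] > self.window_s:
            events.popleft()
        return len(events) >= self.threshold


monitor = FailedLoginMonitor(threshold=3, window_s=10.0)
for t in (0.0, 1.0, 2.0):
    flagged = monitor.record_failure("10.0.0.7", t)  # hypothetical address
print(flagged)
```

The "response" half is left out on purpose. In practice the flag would feed an alert, a temporary block, or a step-up authentication challenge.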

Key Considerations for Optimizing AI Infrastructure in the Cloud and Edge Environment

When moving from on-site AI infrastructure to cloud and edge environments, organizations need to keep several considerations in mind. The first is scalability. Cloud and edge environments make it possible to scale resources up or down based on the needs of the AI applications, so infrastructure can be tuned for both performance and cost-efficiency. Auto-scaling mechanisms adjust resources dynamically as demand changes, maintaining performance without paying for capacity that sits idle.
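To show what an auto-scaling decision can look like, here is a minimal sketch of a proportional scaling rule: it sizes the replica count so that average utilization moves towards a target, then clamps the result to configured bounds. The function name, the 60% target, and the bounds are assumptions for illustration, not any particular platform's defaults.

```python
import math


def desired_replicas(current: int,
                     cpu_percent: int,
                     target_percent: int = 60,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Return the replica count that brings average CPU near the target.

    Uses the proportional rule ceil(current * observed / target),
    then clamps the result to [min_replicas, max_replicas].
    """
    if current < 1 or target_percent <= 0:
        raise ValueError("current must be >= 1 and target_percent > 0")
    raw = math.ceil(current * cpu_percent / target_percent)
    return max(min_replicas, min(max_replicas, raw))


print(desired_replicas(current=4, cpu_percent=90))  # scale up: 4 -> 6
print(desired_replicas(current=4, cpu_percent=30))  # scale down: 4 -> 2
print(desired_replicas(current=8, cpu_percent=95))  # capped at max_replicas: 10
```

The upper bound is what keeps spending predictable, and the lower bound keeps the service available when traffic drops to zero. Production autoscalers usually add a cooldown or tolerance band so that small fluctuations do not cause constant resizing.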

Another crucial consideration is data security and privacy. As AI applications spread across industries, protecting sensitive data becomes paramount. Organizations must implement robust security measures to safeguard data both in transit and at rest. Encryption and access control policies help mitigate security risks and support compliance with data protection regulations.
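An access control policy can be as simple as an explicit allow-list that denies everything else. The sketch below assumes a role-based model. The roles, actions, and resource prefixes are hypothetical, and real systems would normally use the identity and access management service of their cloud provider instead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    role: str             # who the rule applies to
    action: str           # e.g. "read" or "write"
    resource_prefix: str  # resources covered by the rule


# Hypothetical policy: anything not listed here is denied.
POLICY = (
    Rule("ml-engineer", "read", "datasets/"),
    Rule("ml-engineer", "write", "models/"),
    Rule("auditor", "read", "logs/"),
)


def is_allowed(role: str, action: str, resource: str,
               policy: tuple[Rule, ...] = POLICY) -> bool:
    """Return True only if some rule explicitly allows the request."""
    return any(
        rule.role == role
        and rule.action == action
        and resource.startswith(rule.resource_prefix)
        for rule in policy
    )


print(is_allowed("ml-engineer", "read", "datasets/train.csv"))  # True
print(is_allowed("auditor", "write", "logs/access.log"))        # False
```

Denying by default means that a forgotten rule results in a refused request rather than an exposed dataset, which is usually the safer failure mode.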

Q&A

Q: What is AI infrastructure and why is it important?

A: AI infrastructure refers to the underlying technology and resources needed to support artificial intelligence applications and algorithms. It is crucial for enabling AI systems to process and analyze large amounts of data efficiently.

Q: How has the development of AI infrastructure evolved over time?

A: Initially, AI infrastructure was mostly built and managed on-site, requiring organizations to maintain physical servers and storage systems. However, with the advancement of cloud computing and edge computing technologies, many are now transitioning to more agile and scalable solutions.

Q: What are the advantages of moving AI infrastructure to the cloud and edge?

A: Moving AI infrastructure to the cloud and edge offers several benefits, including increased flexibility, scalability, and cost-effectiveness. It also allows for easier access to advanced computing resources and tools.

Q: What challenges are associated with transitioning to cloud and edge-based AI infrastructure?

A: Despite the benefits, there are challenges to consider when transitioning to cloud and edge-based AI infrastructure, such as data security and privacy concerns, network latency issues, and potential compatibility issues with existing systems.

Q: How can organizations effectively manage their AI infrastructure in the cloud and edge environments?

A: To effectively manage AI infrastructure in the cloud and edge environments, organizations should prioritize data security, implement robust monitoring and management tools, and ensure seamless integration with existing systems. Continuous training and upskilling of staff are also essential to keep up with the rapidly evolving technology landscape.

To Wrap It Up

The move towards cloud and edge computing marks a pivotal shift in how AI technologies are built and used. The flexibility and scalability of these platforms let organizations run AI workloads that on-site infrastructure alone would struggle to support. The future of AI infrastructure lies in integrating cloud and edge technologies, and organizations that keep pace with these developments will be better equipped to use AI to drive progress and innovation in the years to come.